Designing and deploying artificial intelligence for an inclusive future of work

On April 7, 2025, as part of a speaker series, Dr. Wendy Cukier, Founder and Academic Director of the Diversity Institute, presented on artificial intelligence and the digital divide.

As part of its ongoing equity, diversity and inclusion (EDI) speaker series, University of Toronto Press hosted a virtual session on the growing impact of artificial intelligence (AI) on equity in the workforce. Led by Dr. Wendy Cukier, Founder and Academic Director of Toronto Metropolitan University’s Diversity Institute (DI), the presentation, “AI and the Digital Divide: Opportunities and Risks,” explored how AI is reshaping sectors, highlighting both the opportunities it offers and the risks it poses to equity-deserving groups. Drawing on Canadian data, real-world examples and research, the session emphasized the urgent need for responsible, inclusive adoption of AI technologies in a rapidly evolving digital landscape.

Despite its global reputation as a leader in AI innovation, shaped by figures like Nobel Prize winner Geoffrey Hinton and deep learning pioneer Yoshua Bengio, Canada continues to lag in AI adoption. With more than $2.57 billion invested in AI and a 57% year-over-year increase in AI patent filings, the country’s research and development output is strong. However, only 35% of Canadian businesses are currently using AI, compared to 72% in the U.S.

A recent DI report created in partnership with the Environics Institute and the Future Skills Centre found that barriers to adoption include a lack of AI skills and low awareness of tools, particularly among small and medium-sized enterprises (SMEs). Additional challenges include regulatory complexity (with 60% citing difficulty keeping up with rules), ethical concerns, intellectual property disputes and scepticism about return on investment. Still, the gender gap in familiarity with AI is narrower than for other technologies, with 47% of women and 53% of men reporting familiarity with AI tools, signalling a potential path to more inclusive digital transformation. Generative AI is “the English major’s revenge,” opening up pathways for people without a background in coding. Because of disciplinary segregation (for example, fewer women in computer science and engineering) and the need for more perspectives to advance AI adoption and responsible use, AI can serve as a significant bridge across the digital divide.

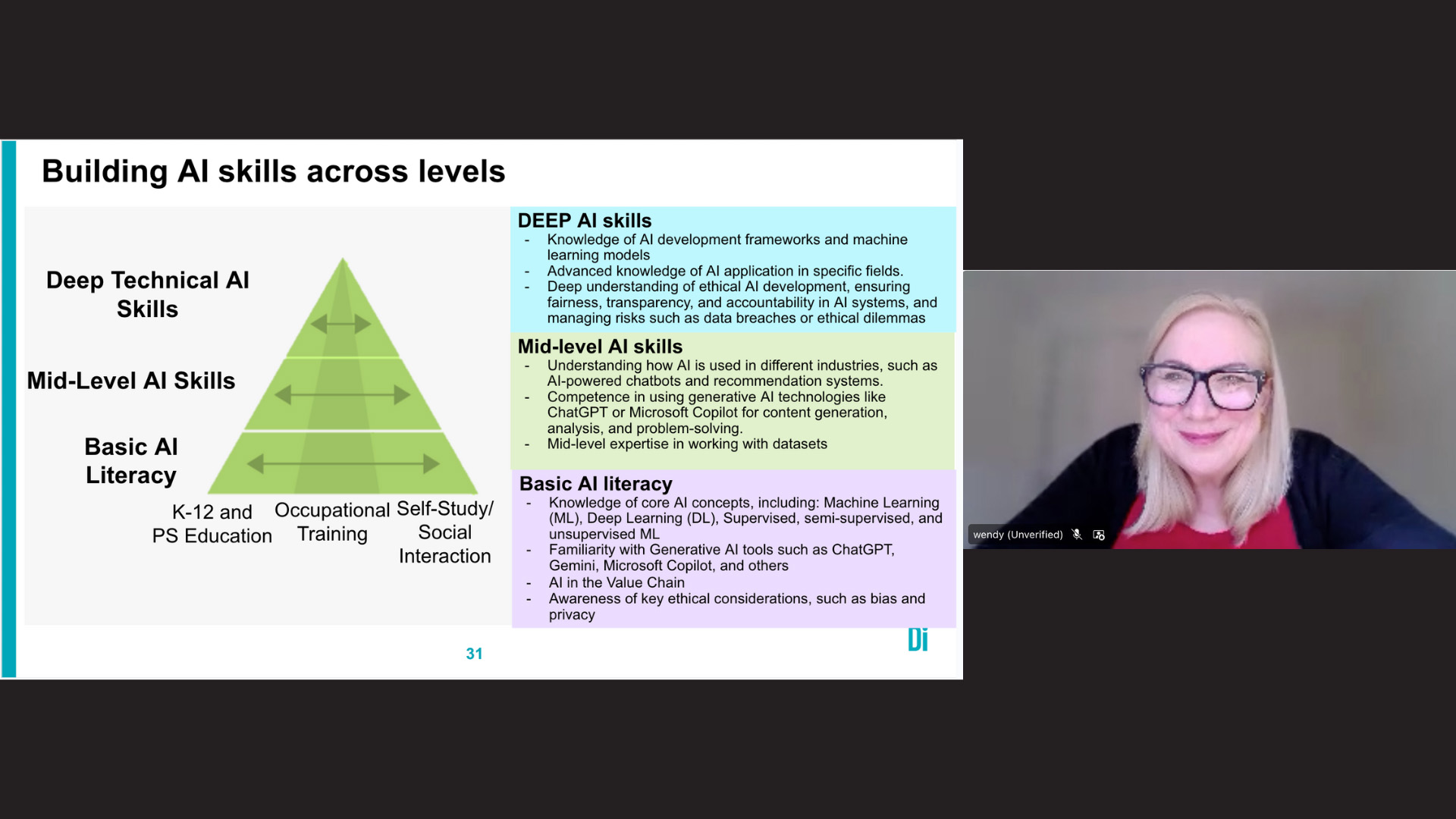

The Diversity Institute created an AI literacy framework outlining three tiers of skills: deep AI skills for developing machine learning models; mid-level skills for effectively using generative AI tools like ChatGPT and Microsoft Copilot; and basic AI literacy, which includes understanding these tools and recognizing ethical issues such as bias and privacy. While not everyone needs deep technical expertise, Cukier stressed that AI literacy matters across all roles to drive digital transformation. She pointed to training programs like Advanced Digital and Professional Training (ADaPT), as well as courses from Google and Microsoft, as pathways to build these competencies.

Artificial intelligence trends in publishing

In the publishing sector, AI is rapidly transforming workflows, from editing to distribution. Cukier pointed to data showing that 40% of publishers are now using AI tools for editing and proofreading, streamlining grammar checks and style consistency. AI is also driving data-informed book design, generating layouts and covers that align with market trends. In marketing, predictive analytics and recommendation engines help publishers personalize campaigns and forecast reader interests. On the logistics side, AI is optimizing distribution routes and predicting delays, improving efficiency and reducing costs across the supply chain. This wave of AI adoption marks a broader shift in the publishing industry, one that is redefining roles and forcing organizations to adapt.

While AI presents opportunities for efficiency and innovation, Cukier warned that it also carries significant risks, particularly for SMEs: accuracy issues, hallucinations, data privacy concerns, intellectual property conflicts and regulatory challenges. One of the most critical concerns is bias, as AI systems trained on flawed or non-representative data can reinforce existing inequalities. Bias can stem from historical patterns in training data, skewed feature selection and a lack of transparency, making discriminatory outcomes difficult to detect or correct. “We cannot allow AI to be completely autonomous. It has to have human oversight,” she said. Without proper oversight, these systems risk perpetuating harm, especially in areas like hiring or lending. As AI becomes more embedded in business operations, Cukier stressed the need for responsible governance that prioritizes fairness, security and inclusivity.

Building guardrails and mitigating bias

To ensure AI systems are fair, inclusive and trustworthy, Cukier emphasized the need for strong guardrails and proactive measures to mitigate bias. These include embedding values like transparency, accountability and privacy into AI design from the outset, conducting regular bias audits and maintaining human oversight to catch errors and correct cultural assumptions, many of which reflect a narrow, North American lens. Large language models must be trained to understand cultural nuances, recognize global English variants and interact equitably with users across diverse linguistic and social contexts. Rather than stifling innovation, these safeguards enable responsible experimentation and ensure AI systems serve a wider, more inclusive population while remaining aligned with evolving legal standards.

Cukier closed the session by underscoring that AI, when deployed responsibly, can be a powerful tool for advancing EDI across sectors. Programs like ADaPT, which provides no-cost training to early talent including newcomers and under-represented groups, demonstrate how building AI literacy and embedding responsible practices into organizational culture can drive both innovation and inclusion. Cukier emphasized that inclusive AI is essential and that it requires intentional design, targeted training and sustained oversight to ensure the technology serves everyone.