How to program a better tomorrow: Harnessing disruptive technologies

Innovation Issue 38: Summer 2023

Harnessing the power of AI to make digital music more accessible

Culture

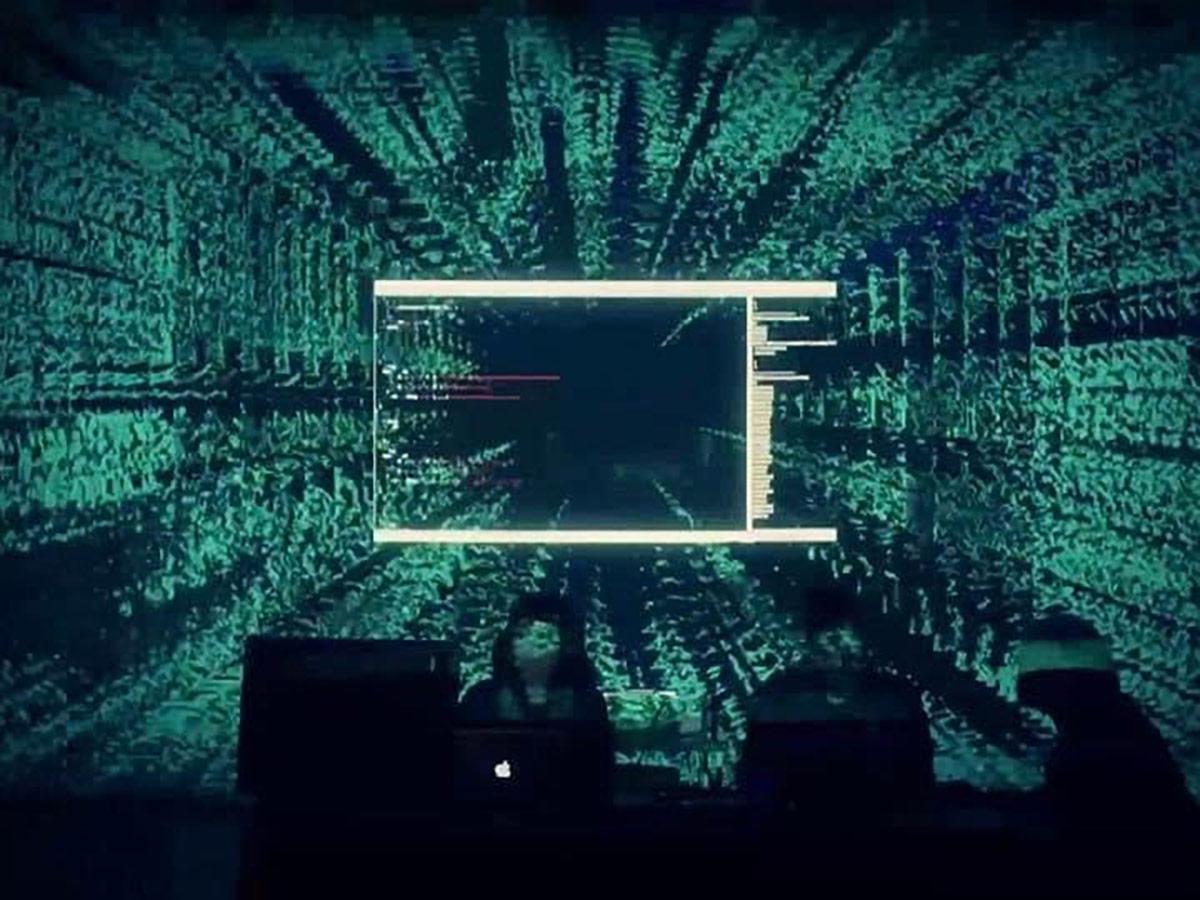

During a live coding performance, composers create music in real time. Both the code used to create the music and digital visualizations of the music are projected on a screen behind them. Photo: Norah Lorway.

A performer writes code on a laptop. The code is projected on a screen behind them and instantly produces patterns of sound for a live audience to hear, see and experience. This kind of performance is live coding, a form of improvised digital music composition in which the performer writes and changes computer code in real time to create music.

Live coding can be an immersive, interactive way to compose and experience music, but it has a high barrier to entry for composers who aren’t programmers. To make live coding more accessible, Norah Lorway, a professor of professional music in The Creative School at Toronto Metropolitan University (TMU), created Scorch.

Scorch is a commercially available programming language that uses AI and machine learning to support collaboration between a live coding composer and AI. It works much like a software plug-in and connects to a variety of digital audio workstations, so composers can keep using their existing sound libraries.

“The idea behind the coding is that users can make any type of pattern, any type of beat because of the freedom of the code,” said professor Lorway.

Scorch was designed as an accessible entry point for composers who do not have significant programming knowledge, including groups that have traditionally been under-represented in programming, such as women and people of colour. It also works with screen readers, so composers with visual impairments can use it. Scorch uses machine learning trained on simple code written by the user to autocomplete code, suggest variations or generate entirely new programs. Its AI also helps users fix errors in their code, so they can get a live coded performance running more quickly.

The idea for Scorch came from one of professor Lorway’s earlier collaborative research projects. That project set out to build a bot that would make it easier for professor Lorway to compose live coding performances as a solo artist while also giving her another entity to interact with. The result was Autopia, a gamified system that supports collaboration between human composers and AI and incorporates audience participation.

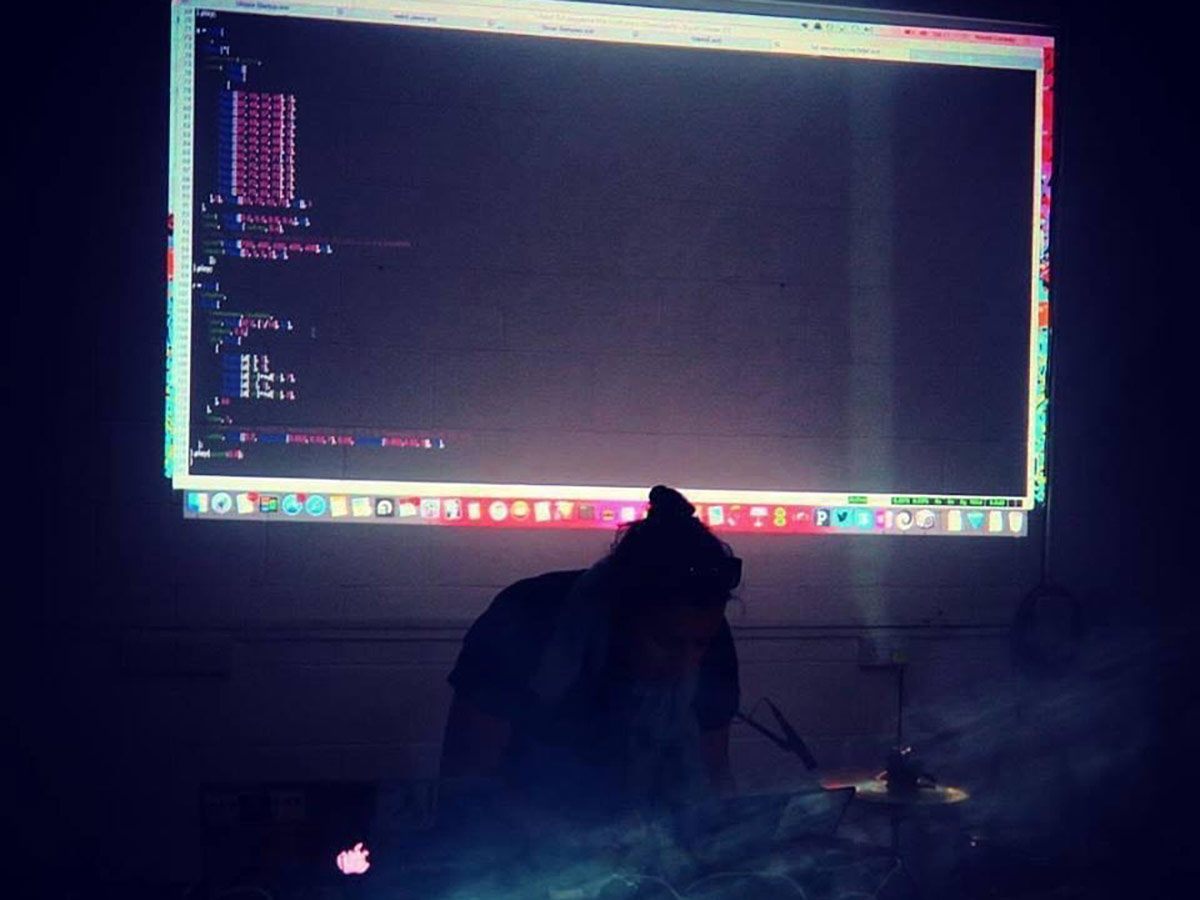

The Creative School professor Norah Lorway incorporates AI in live coding music composition. As she performs, the code used to create the music is displayed on a screen behind her. Photo: Norah Lorway.

During a live coding performance with Autopia, audience members use a web-based app to vote for the beats and sound patterns they enjoy. Autopia collects this feedback and plays the sounds that receive the most votes. Professor Lorway is now rewriting Autopia in Scorch to make it more accessible outside academia.
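The article does not describe how Autopia counts votes internally. As a rough illustration only, the basic idea of "play the sound with the most votes" can be sketched in Python. This sketch assumes each audience vote arrives as the name of a sound pattern. The function `pick_winning_pattern` and the pattern names are hypothetical and are not part of Autopia or Scorch.

```python
from collections import Counter
from typing import List, Optional


def pick_winning_pattern(votes: List[str]) -> Optional[str]:
    """Return the pattern name with the most votes in one voting round.

    Hypothetical helper: assumes each vote is a pattern name string.
    Returns None when nobody voted. On a tie, the pattern that received
    its first vote earliest wins, because Counter.most_common orders
    equal counts by first appearance.
    """
    if not votes:
        return None
    tally = Counter(votes)
    winner, _count = tally.most_common(1)[0]
    return winner


# One hypothetical round of audience votes
round_votes = ["kick_a", "hihat_b", "kick_a", "bass_c"]
print(pick_winning_pattern(round_votes))
```

A real performance system would likely repeat this for each round of voting and hand the winning pattern to the sound engine; those details are left out here.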

While Scorch and Autopia let live coding composers create music more quickly and with less technical knowledge, professor Lorway advises musicians and artists working with AI to preserve the human element.

“With music, you do need that human kind of critical thinking to get involved with the process,” said professor Lorway. “People often forget about biases in AI itself.”

Watch a demo performance using Autopia.

Read more about Scorch and The Scorch Manifesto.